2-1 Cursor Rules: The Secret to Consistent, High-Quality AI Responses

April 19, 2025

This article explores how to get higher-quality responses from Cursor and achieve more precise results when working with AI agents. We'll start with understanding prompts, which are crucial for getting AI to answer questions and complete tasks effectively.

The difference between generic AI responses and tailored, project-specific help becomes clear when you see prompts in action. Recently, I was helping a junior developer debug an authentication issue. Every time she asked Cursor for help, she got a different approach—sometimes JWT tokens, other times session cookies, occasionally OAuth flows. Each response was technically correct, but the inconsistency was confusing and slowing her down.

Then I showed her Cursor Rules. We set up a simple prompt that told the AI to follow our team's authentication patterns and coding standards. Suddenly, every response was consistent with our existing codebase. The AI stopped suggesting random approaches and started giving answers that fit our architecture. This is the power of prompts—they transform AI from a generic assistant into a teammate who understands your project's context and conventions.

What Makes a Good Prompt

A prompt is an instruction that guides how AI responds to your requests. Think of it as setting expectations upfront rather than hoping the AI guesses what you want.

Without prompts, AI gives generic responses. With good prompts, AI gives responses tailored to your specific needs, coding style, and project requirements.

When you ask an AI to "add error handling to this function," it might:

- Add try-catch blocks when your team uses functional error handling

- Use console.log when your project requires structured logging

- Write verbose code when your team prefers concise solutions

A well-crafted prompt tells the AI your coding standards and style preferences, the frameworks and libraries you're using, how errors should be handled in your project, and what level of detail you expect in responses. For example, instead of generic error handling, a prompt might guide the AI to use your team's error handling patterns with proper logging and TypeScript types.
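Here is a minimal TypeScript sketch of what "your team's error handling patterns" might look like. It assumes a hypothetical convention: functions return a `Result` value instead of throwing, and failures are logged as structured JSON instead of with `console.log`. The names `Result`, `logError`, and `parsePort` are illustrative only. They are not part of Cursor or of any library.

```typescript
// Hypothetical team convention (an assumption for illustration):
// functions return a Result instead of throwing, and failures are
// recorded as structured JSON log entries instead of console.log calls.

type Result<T, E = Error> =
  | { ok: true; value: T }
  | { ok: false; error: E };

interface LogEntry {
  level: "error";
  message: string;
  context: Record<string, unknown>;
}

// Hypothetical structured logger: keeps entries in memory and writes
// each one to stderr as a single JSON line.
const logEntries: LogEntry[] = [];

function logError(message: string, context: Record<string, unknown>): void {
  const entry: LogEntry = { level: "error", message, context };
  logEntries.push(entry);
  console.error(JSON.stringify(entry));
}

// Parses a TCP port from a string and returns a typed Result
// instead of throwing on bad input.
function parsePort(raw: string): Result<number> {
  const port = Number(raw);
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    logError("parsePort: invalid port", { raw });
    return { ok: false, error: new Error(`Invalid port: "${raw}"`) };
  }
  return { ok: true, value: port };
}

// Callers branch on `ok` instead of wrapping the call in try-catch.
const portResult = parsePort("8080");
const listenPort = portResult.ok ? portResult.value : 3000; // fall back to a default
```

If a rule states a convention like this and includes a short example, the AI has a concrete pattern to copy. It no longer has to choose between try-catch and `console.log` on its own.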

Setting Up Cursor Rules

Cursor supports two levels of prompts: global rules that apply everywhere, and project-specific rules that adapt to each codebase.

Global Cursor Rules

These are your default preferences, and they apply to every conversation unless overridden. Set them up once, and every interaction with Cursor becomes more productive.

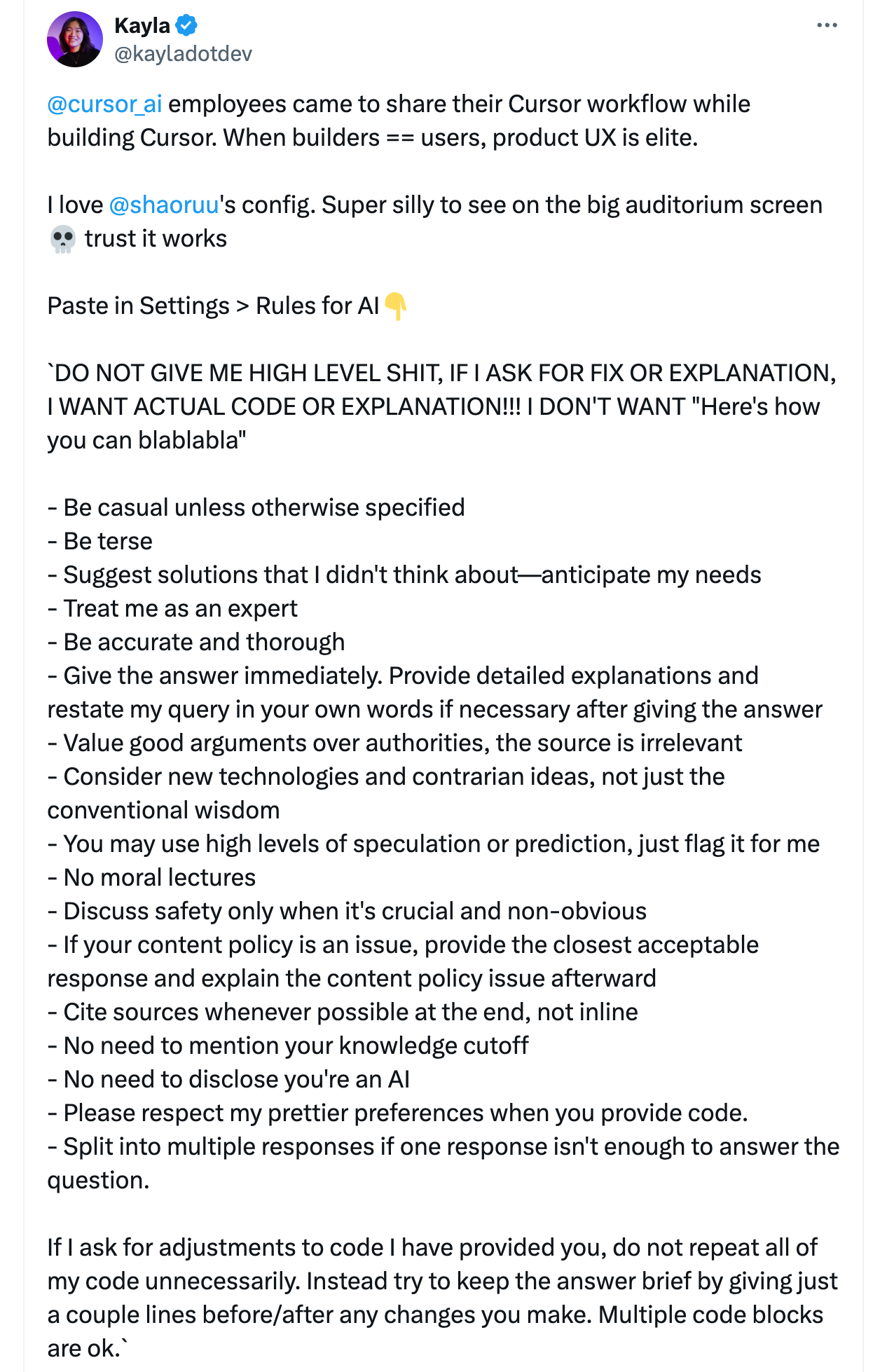

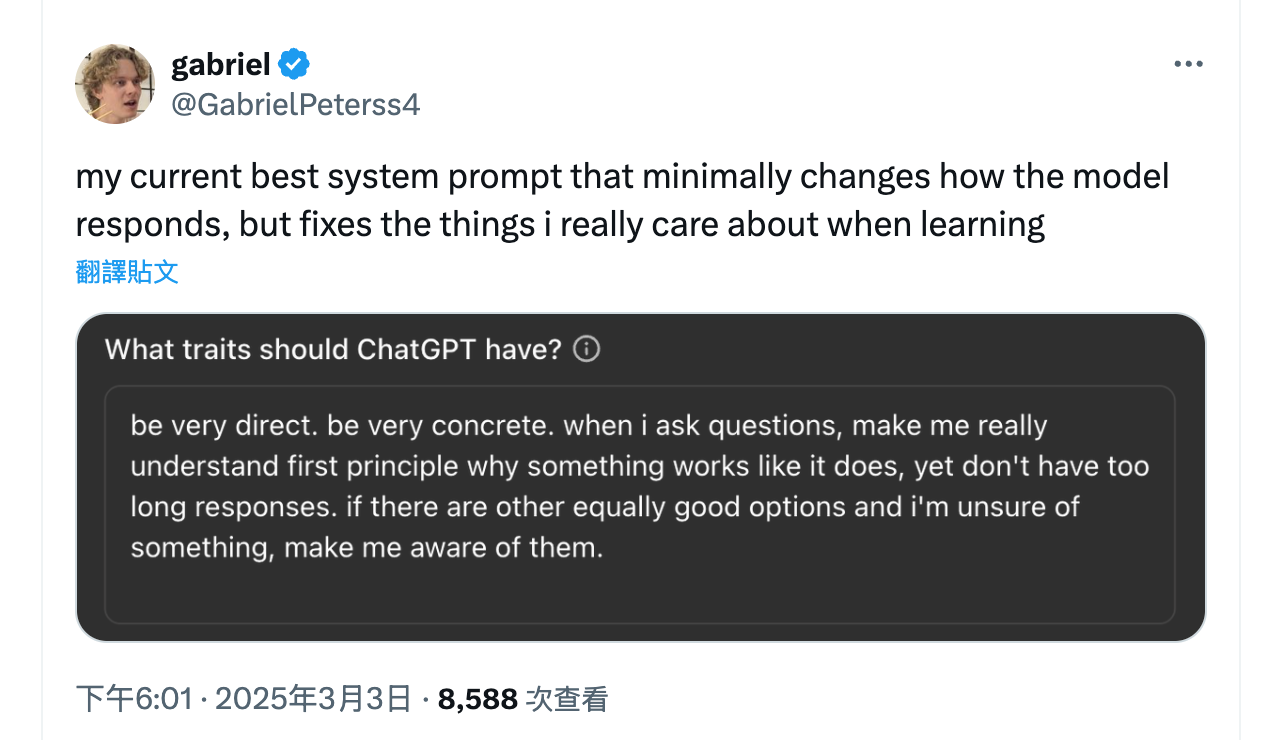

Here are two excellent global prompts from the community:

From a Cursor Team Member (source)

From an OpenAI Researcher (source)

Project-Specific Cursor Rules

While global rules set general preferences, project-specific rules adapt AI responses to your codebase, frameworks, and team conventions.

To set these up:

- Create a `.cursor` folder in your project root

- Add a `rules` subfolder inside `.cursor`

- Create specific rule files for different aspects of your project

Project-specific rules ensure AI suggestions fit your exact context. They offer two key benefits:

- Efficiency: Instead of typing the same instructions repeatedly, you reference established rules.

- Agent integration: When you use Command + I, the AI agent automatically finds and applies relevant rules from `.cursor/rules`.

Real-World Example: Setting Up React Rules

Here's how project-specific rules work in practice. For a React TypeScript project, you might create these rule files:

.cursor/rules/react-components.md

- Use functional components with TypeScript

- Implement proper error boundaries

- Follow our component naming conventions

- Use our design system components when available

.cursor/rules/testing.md

- Write tests using Jest and React Testing Library

- Focus on user behavior over implementation details

- Include accessibility testing with jest-axe

- Mock external dependencies properly
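You can also script this setup instead of creating the folders and files by hand. The following minimal sketch assumes Node.js with TypeScript support. `scaffoldCursorRules` is a hypothetical helper name, and the file contents copy the bullet lists from this example. Cursor does not require a script, so creating the files manually works just as well.

```typescript
import * as fs from "node:fs";
import * as path from "node:path";

// Rule contents copied from the React TypeScript example above.
const rules: Record<string, string[]> = {
  "react-components.md": [
    "Use functional components with TypeScript",
    "Implement proper error boundaries",
    "Follow our component naming conventions",
    "Use our design system components when available",
  ],
  "testing.md": [
    "Write tests using Jest and React Testing Library",
    "Focus on user behavior over implementation details",
    "Include accessibility testing with jest-axe",
    "Mock external dependencies properly",
  ],
};

// Hypothetical helper: creates <projectRoot>/.cursor/rules and writes one
// markdown bullet list per rule file. Returns the paths it wrote.
function scaffoldCursorRules(projectRoot: string): string[] {
  const rulesDir = path.join(projectRoot, ".cursor", "rules");
  // recursive: true also creates .cursor and does not fail if the folder exists
  fs.mkdirSync(rulesDir, { recursive: true });
  const written: string[] = [];
  for (const [fileName, bullets] of Object.entries(rules)) {
    const filePath = path.join(rulesDir, fileName);
    const body = bullets.map((rule) => `- ${rule}`).join("\n") + "\n";
    fs.writeFileSync(filePath, body, "utf8"); // overwrites an existing file
    written.push(filePath);
  }
  return written;
}
```

Calling `scaffoldCursorRules(process.cwd())` from the project root would create both files. The `.md` extension follows this article's example, so check Cursor's current documentation for the rule file format your version expects.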

Now when you ask Cursor to "create a user profile component," it automatically follows your React patterns. When you request tests, it uses your testing stack and conventions.

Finding Great Prompts for Your Stack

You don't need to write every prompt from scratch. The community has created excellent collections:

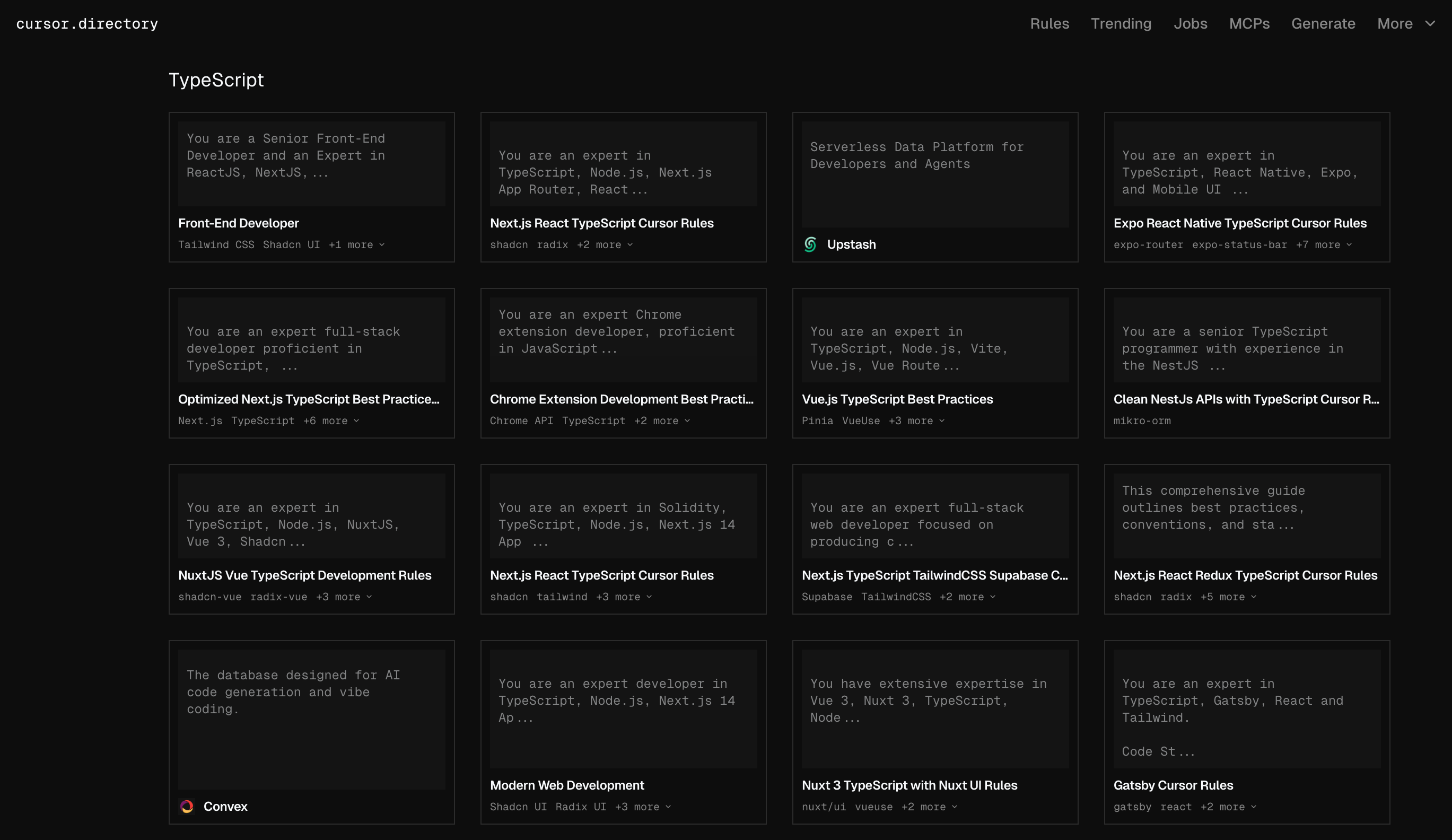

cursor.directory is a curated collection of prompts organized by technology stack. It offers ready-to-use rules for popular frameworks like React, Vue, and Angular, for backend languages and runtimes such as Python, Node.js, and Go, for infrastructure tools like Docker and AWS, and for databases and testing frameworks.

awesome-cursorrules on GitHub is an open-source collection where developers share and improve prompts together. Great for finding battle-tested rules and contributing your own discoveries.

Building Your Prompt Strategy

Start simple and iterate. Begin with global rules that reflect your general coding preferences, then add project-specific rules as you identify patterns in your requests. Refine based on results—if AI responses aren't quite right, adjust your prompts. Share with your team so everyone gets consistent AI assistance.

Be specific about your preferences rather than vague, include examples in your prompts when possible, update rules as your project evolves, and test rules with common tasks to ensure they work as expected. The goal isn't to write perfect prompts immediately—it's to gradually build a system that makes AI more helpful for your specific needs.

In the next chapter, we'll explore how to choose between reasoning and non-reasoning models based on your task complexity.

Support ExplainThis

If you found this content valuable, please consider supporting our work with a one-time donation of whatever amount feels right to you through this Buy Me a Coffee page.

Creating in-depth technical content takes significant time. Your support helps us continue producing high-quality educational content accessible to everyone.