4-2 What is MCP? What Problem Does It Solve?

May 12, 2025

In this part of our Cursor guide series, we'll explore a technology that's changing how AI tools connect to external systems. Picture this: Cursor has just generated working code for your new feature, complete with tests and documentation.

You're ready to create a pull request, but now comes the tedious part: manually copying the AI-generated PR description, switching to GitHub, clicking through the interface, and pasting everything in the right places. This break in your workflow can happen many times a day, and it's exactly the kind of problem that Model Context Protocol (MCP) was designed to solve.

What is Model Context Protocol (MCP)?

Breaking down the three components of MCP helps explain its purpose:

- Model: AI models like GPT, Claude, or Gemini that power your coding assistant

- Context: External data and tools that the AI needs to access

- Protocol: A standardized way for these connections to happen

MCP creates a bridge between AI models and external tools, allowing your AI assistant to directly interact with services like GitHub, Jira, databases, and testing frameworks. Instead of you manually moving information between tools, the AI can handle these integrations automatically.

Consider the earlier example of creating a pull request. With MCP, when Cursor finishes generating your code, it can automatically create the PR on GitHub, populate the description with relevant details, assign reviewers based on the changed files, and even link it to the corresponding Jira ticket. The manual copying and switching between tools becomes unnecessary.
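To make this more concrete, here is a rough sketch of what such a request could look like on the wire. MCP messages are JSON-RPC 2.0, and a tool invocation is sent with the `tools/call` method. The tool name `create_pull_request`, the branch names, and the argument fields are illustrative assumptions, not taken from a specific server's documentation.

```python
import json


def build_tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request asking an MCP server to run one tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool name and arguments, for illustration only.
request = build_tool_call(
    request_id=1,
    tool_name="create_pull_request",
    arguments={
        "title": "Add user avatar upload",
        "head": "feature/avatar-upload",
        "base": "main",
        "body": "Adds the upload endpoint, tests, and docs.",
    },
)

print(json.dumps(request, indent=2))
```

The point is that the AI application only has to produce this one message shape, whichever tool sits on the other end.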

Why Do We Need MCP?

You might wonder why we need another protocol when AI models can already call external APIs. The answer lies in understanding how AI models currently handle external integrations and why that approach has limitations.

Before MCP, developers used function calling to connect AI models to external APIs. With function calling, you define specific functions and their parameters, then instruct the AI model how to use them. This works, but it has significant drawbacks.
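As a minimal sketch of that approach, the example below defines one tool in the JSON Schema style that function-calling APIs commonly use, then dispatches a simulated model reply to a handler. The field names, the `create_pull_request` function, and the simulated reply are all assumptions for illustration; real providers differ in the exact format, which is part of the problem.

```python
import json

# Hypothetical tool definition. Field names vary between providers.
create_pr_tool = {
    "name": "create_pull_request",
    "description": "Open a pull request on GitHub.",
    "parameters": {
        "type": "object",
        "properties": {
            "title": {"type": "string"},
            "body": {"type": "string"},
            "base": {"type": "string"},
        },
        "required": ["title", "base"],
    },
}


def create_pull_request(title, base, body=""):
    """Stand-in for the GitHub API call you would have to write yourself."""
    return {"status": "created", "title": title, "base": base, "body": body}


# Simulated model reply: a function name plus JSON-encoded arguments.
model_reply = {
    "name": "create_pull_request",
    "arguments": '{"title": "Add avatar upload", "base": "main"}',
}

# Your own glue code parses the reply and dispatches to the right function.
handlers = {"create_pull_request": create_pull_request}
result = handlers[model_reply["name"]](**json.loads(model_reply["arguments"]))
print(result)
```

Every piece here (the definition, the handler, and the dispatch code) is written by you for one specific model's format.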

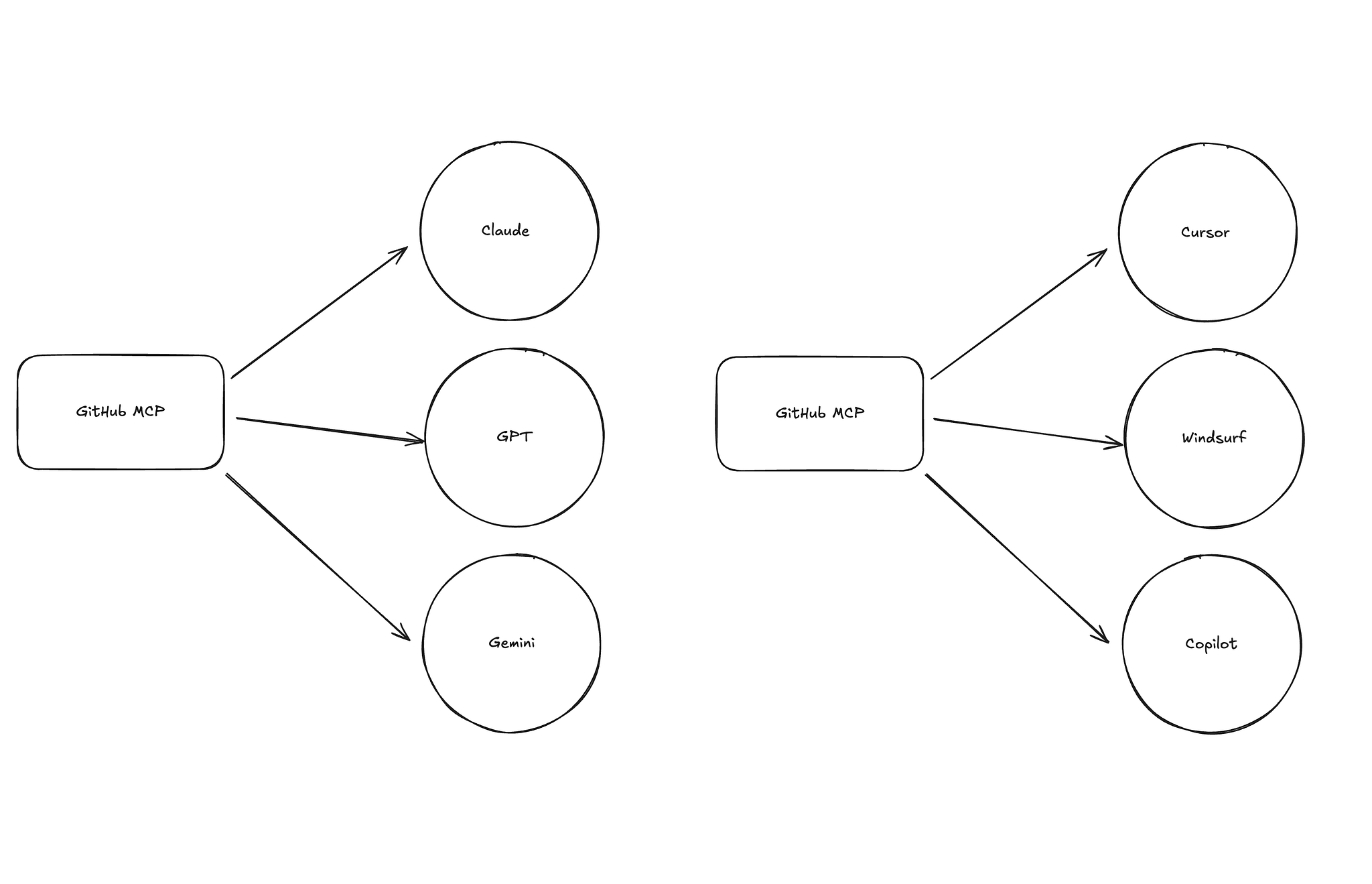

The real problem with function calling is m×n complexity, where m is the number of AI models and n is the number of tools. Each model provider defines its own function-calling format, so every model-tool pair needs its own integration. With 3 AI models and 5 tools, that means building 3×5 = 15 separate integrations.

Want to connect GitHub to both GPT-4 and Claude? You need a separate integration for each model. Want to add a new model such as Gemini? You need to rebuild your GitHub, Jira, and database integrations for it as well. This multiplicative growth makes it impractical to build comprehensive tool ecosystems.

The Standardization Solution

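MCP solves this by providing a common protocol that works consistently across different AI models and applications. Instead of m×n integrations, an application that speaks MCP needs only 1×n: the client side of the protocol is implemented once, and each tool is wrapped once as an MCP server. Here's why: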

With MCP, you build:

- 1 MCP client: A single client that can work with any AI model that supports MCP

- n MCP servers: One for each tool (GitHub, Jira, Database, Slack, Linear = 5 servers)

- Total: 1 × 5 = 5 integrations instead of 15 separate integrations

The key insight is that the MCP client acts as a universal translator. Once you have one MCP client, it can connect to all n tools through their MCP servers. Your GitHub MCP server can talk to GPT-4, Claude, or Gemini through the same MCP client.
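The arithmetic above can be checked with a short sketch. It counts integrations the way this article does: one per model-tool pair for function calling, and one server per tool behind a shared client for MCP. The model and tool names are just the examples used in the text.

```python
def pairwise_integrations(models, tools):
    """Function calling: one bespoke integration per (model, tool) pair."""
    return [(model, tool) for model in models for tool in tools]


def mcp_servers(tools):
    """MCP: one server per tool, reused by every model behind the client."""
    return list(tools)


models = ["GPT-4", "Claude", "Gemini"]
tools = ["GitHub", "Jira", "Database", "Slack", "Linear"]

print(len(pairwise_integrations(models, tools)))  # 15
print(len(mcp_servers(tools)))                    # 5

# Adding a fourth model grows the pairwise count but not the server count.
more_models = models + ["another-model"]
print(len(pairwise_integrations(more_models, tools)))  # 20
print(len(mcp_servers(tools)))                         # 5
```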

Think of it like USB-C for AI integrations. Anthropic's own MCP documentation uses the same analogy, comparing MCP to a USB-C port for AI applications - a universal connector that works everywhere.

Just as USB-C ended the frustration of carrying different cables for different devices, MCP eliminates the need to rebuild integrations for each new AI model or application. Before USB-C, you needed different cables for your phone, laptop, and tablet. After widespread USB-C adoption, one cable works for everything.

The standardization aspect is crucial. When multiple AI providers like Anthropic and OpenAI support the same protocol, developers can build integrations once and use them across different AI models. This reduces development time and increases the likelihood that useful integrations will be built and shared.

How MCP Transforms Your Development Workflow

Now let's look at concrete examples of how MCP integration in Cursor can eliminate manual tasks from your development workflow.

Automatic Requirement Fetching

Currently, when you want to implement a new feature, you need to copy requirements from your project management tool and paste them into Cursor. With MCP connecting Cursor to Linear or Jira, you can simply tell Cursor: "Implement the feature described in LINEAR-1234." The AI automatically fetches the ticket details, understands the requirements, and begins implementation.

Integrated Testing Workflows

After Cursor generates your code, you typically need to manually run tests and check results. MCP integration with testing frameworks like Playwright changes this completely. Cursor can write end-to-end tests, automatically launch browsers, execute the tests, and even update the code based on test results - all without manual intervention.
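Setting this up usually means registering an MCP server with Cursor. The sketch below builds a configuration of the kind Cursor reads from a file such as `.cursor/mcp.json`, with servers listed under an `mcpServers` key. The server label `playwright`, the `npx` command, and the `@playwright/mcp@latest` package name are assumptions here; check the current Cursor and Playwright MCP documentation before relying on them.

```python
import json

# Assumed configuration shape: one entry per MCP server, each describing
# the command Cursor should launch to start that server.
config = {
    "mcpServers": {
        "playwright": {
            "command": "npx",
            "args": ["@playwright/mcp@latest"],
        }
    }
}

# Print the JSON so it can be pasted into the config file by hand.
config_text = json.dumps(config, indent=2)
print(config_text)
```

Printing instead of writing the file keeps the example free of side effects; in practice you would save the output to your project's MCP config file.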

Seamless Git Operations

The pull request scenario from our opening example becomes fully automated with MCP. Cursor can:

- Generate code based on your specifications

- Create appropriate commit messages

- Push changes to a new branch

- Create a pull request with detailed descriptions (via GitHub MCP server)

- Add relevant reviewers based on code ownership (via GitHub MCP server)

- Link the PR to the original ticket (via Jira/Linear MCP server)
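The last three steps can be sketched as a series of tool calls routed through a single client. This is a toy model, not the real MCP SDK: `ToolRouter`, the tool names, the reviewer name, and the `example.com` URL are all hypothetical, and plain Python functions stand in for the GitHub and Linear MCP servers.

```python
class ToolRouter:
    """Toy stand-in for an MCP client: routes calls to named servers."""

    def __init__(self):
        self.servers = {}

    def register(self, server_name, tools):
        """Attach a server, given as a dict of tool name -> callable."""
        self.servers[server_name] = tools

    def call(self, server_name, tool_name, **arguments):
        """Look up the tool on the named server and invoke it."""
        return self.servers[server_name][tool_name](**arguments)


def create_pull_request(title, head):
    return {"url": f"https://example.com/pr/{head}", "title": title}


def request_reviewers(pr_url, reviewers):
    return {"pr_url": pr_url, "reviewers": reviewers}


def link_pull_request(ticket, pr_url):
    return {"ticket": ticket, "linked": pr_url}


router = ToolRouter()
router.register("github", {
    "create_pull_request": create_pull_request,
    "request_reviewers": request_reviewers,
})
router.register("linear", {"link_pull_request": link_pull_request})

# The same client drives both servers, one step after another.
pr = router.call("github", "create_pull_request",
                 title="Add avatar upload", head="feature-avatar-upload")
review = router.call("github", "request_reviewers",
                     pr_url=pr["url"], reviewers=["alice"])
link = router.call("linear", "link_pull_request",
                   ticket="LINEAR-1234", pr_url=pr["url"])

print(pr, review, link, sep="\n")
```

Adding another service means registering one more server; the calling code keeps the same shape.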

MCP represents a shift from AI as an isolated coding assistant to AI as an integrated member of your development team, capable of working with the same tools you use but without the context switching overhead that slows down human developers.
